Why your tests are failing and how to combat unreliable automation

If you have previously created automated test scripts, or are in the early stages of creating a new solution, you have likely come across situations where things, for lack of a better word, break, and seemingly for no easily explainable reason.

Either they previously passed and are now failing, they fail inconsistently, or they have never passed but get closer to the end with every run you attempt.

Despite the temptation to immediately rerun any failed test and chalk up anything that doesn’t meet your expected results to ‘one of those things’, you really need to take the time to understand what caused the failure. Put steps in place to prevent it from happening in the future, and you’ll come away with a more reliable and effective test automation suite.

Why are my tests failing?

I know what it’s like when you think you have coded the perfect test case and are convinced that nothing could go wrong.

Pressing the start button in your IDE, you sit back with a smug expression on your face as the test begins to run without error. Then, after a minute of successful assertions, it hits a point in the test and just… hangs there.

Huh? But it was coded perfectly! You see no mistake in the logic or the assertions you are making. You then try running it again, and this time… it passes without error.

So what is going on? What causes a test to pass sometimes, but later fail under seemingly identical conditions?

The biggest problem for an automation engineer, and the cause of more failing automated tests than anything since trying to automate a web application in Internet Explorer 11, can be boiled down to one distinct attribute.

Change.

The expectations you have coded into your automated tests (certain elements being present, for example) are not being met, either because of a change in the web page’s structure or because network conditions delay the objects appearing in the DOM, leaving your tests no alternative but to report a timeout exception.

The changes can originate from multiple sources and, most of the time, it might not even be your code that is the problem (in that you are missing a pesky bracket or semicolon). It is more that the automation tool isn’t ‘seeing’ what a human user can.

Luckily, there are tools we can use to make discovering the source of these errors as simple as possible.

Browser developer tools are your best friend

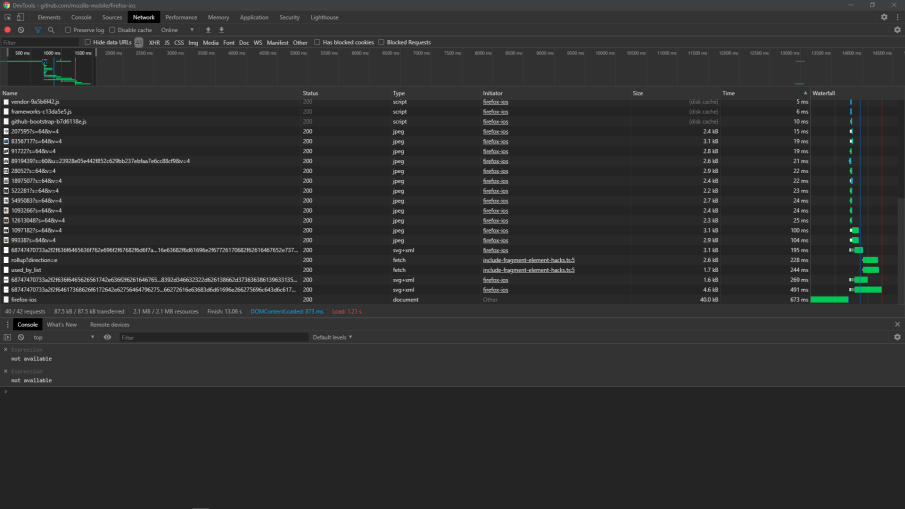

One of the best tools for seeing when elements are loaded onto the page, what network requests are being made, and technical details such as the required cookies or expected header information for requests, exists in the browser that you are using to read this blog post.

Each of the major browsers comes with a built-in set of powerful features, which I spend more time analysing when putting together a new automation framework than the IDE I’m working in. I want to know the ins and outs of the problem I am trying to solve so that the solution I come up with will serve its intended purpose.

I often think of the following quote when I confront a fresh problem that needs solving.

The quality of everything human beings do, everything – everything – depends on the quality of the thinking we do first.

I know that it might seem a bit intimidating to open up the developer tools initially and start rummaging around. But once you know the purpose of each tab along the top (hint: the most important ones for dealing with web applications are Console, Network and Elements), you will soon discover how each can help in debugging many of the common issues that afflict test automation engineers.

If you need a quick primer on the use of the Dev Tools in Chrome, I recommend taking the time to review Google’s own documentation.
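Everything the Network tab records can also be exported as a HAR file, which makes it possible to hunt for slow or failing requests outside the browser. The sketch below shows one way to do that in Node.js. The `findSuspectRequests` name, the 1000 ms threshold and the sample data are assumptions made for illustration; the only things it relies on are the standard HAR fields `log.entries[].request.url`, `response.status` and `time`.

```javascript
// Flag requests that were slow or returned an error status in a HAR export.
// thresholdMs is an arbitrary cut-off chosen for this example.
function findSuspectRequests(har, thresholdMs = 1000) {
  return har.log.entries
    .filter((entry) => entry.time > thresholdMs || entry.response.status >= 400)
    .map((entry) => ({
      url: entry.request.url,
      status: entry.response.status,
      timeMs: Math.round(entry.time),
    }));
}

// Hand-written sample in HAR shape. A real export could be loaded with
// JSON.parse(fs.readFileSync('session.har', 'utf8')) (file name is hypothetical).
const sampleHar = {
  log: {
    entries: [
      { request: { url: 'http://localhost:8888/' }, response: { status: 200 }, time: 120.4 },
      { request: { url: 'http://localhost:8888/api/products' }, response: { status: 200 }, time: 4321.7 },
      { request: { url: 'http://localhost:8888/api/users' }, response: { status: 500 }, time: 85.2 },
    ],
  },
};

console.log(findSuspectRequests(sampleHar));
```

Requests that show up in a list like this are good candidates for an explicit wait in the test, or for a conversation with the developers about why they are slow.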

Is an API being requested?

Depending on the application you are working with, it’s likely to rely on outside sources to render dynamic content. A list of products for a shopping application, for example, or a list of registered users, will need to be fetched from a backend database in order to be rendered on the webpage.

If any assertions rely on that content being present before continuing, a delay in the API returning a 200 status code is likely to affect the subsequent test code and could result in an easily avoidable error.

If you are using Selenium, for example, you can use the built-in WebDriverWait class to wait for your dynamic content to be present on the page:
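The idea behind any explicit wait is the same regardless of the tool: poll for a condition until it holds or a deadline passes, rather than sleeping for a fixed amount of time. Here is a minimal sketch of that idea in plain JavaScript. The `waitFor` helper, its option names and the simulated API delay are all hypothetical and are not part of Selenium or Cypress.

```javascript
// Poll `condition` until it returns a truthy value or the timeout expires.
async function waitFor(condition, { timeoutMs = 8000, intervalMs = 100 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const result = await condition();
    if (result) return result;
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    // Pause briefly before checking again.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Simulate content that only appears once a slow API call has returned.
let products = null;
setTimeout(() => { products = ['Keyboard', 'Mouse']; }, 300);

waitFor(() => products, { timeoutMs: 2000, intervalMs: 50 })
  .then((items) => console.log('Rendered products:', items))
  .catch((err) => console.error(err.message));
```

The test continues as soon as the content is there, and a genuine failure still surfaces as a clear timeout error instead of a confusing assertion further down the script.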

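// Wait up to 8 seconds for the element to be present in the DOM.
// (Selenium 4 takes a Duration here instead: Duration.ofSeconds(8).)
WebElement someContent = new WebDriverWait(driver, 8)
    .until(ExpectedConditions.presenceOfElementLocated(By.id("someContent")));

Or in Cypress, you can use cy.route() together with cy.wait() to wait for network responses before continuing: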

// Note: cy.server() and cy.route() were deprecated in Cypress 6.0; newer versions use cy.intercept() instead.
cy.server()
cy.route('api/*').as('api')   // alias any request matching api/*
cy.visit('http://localhost:8888/')
cy.wait('@api')               // pause until the aliased request has responded
cy.get('h1').should('contain', 'Hello World')

Automated testing is an investment of resources for any company to make, with the most valuable resource being time. Time that is not spent exercising reliable automated test strategies will result in a loss of confidence, and in potentially disastrous bugs being missed or misinterpreted with every failed test case that you rerun.

A failed test case should be a trigger to investigate what is wrong. Maybe you need to put in some additional assertions or perform another step prior to moving on in your script. Rerunning a case may prove that your test works some of the time, but if you aren’t investigating failures and debugging problems, then you aren’t getting the full benefit of your automated test tool.

I hope you found this post useful. If there’s a Test Automation topic you have a question on, or another Software Testing topic you would like to discuss, reach out to me on Twitter, LinkedIn, or via my contact form.
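One way to keep reruns honest is to record every attempt and report a test that only passes some of the time as flaky rather than green. The sketch below assumes a hypothetical history of attempt results; `summariseAttempts` and the status labels are illustrative names, not part of any test runner.

```javascript
// Classify a test from the outcomes of all its attempts (true = passed).
function summariseAttempts(attempts) {
  if (attempts.length === 0) return 'not-run';
  const passes = attempts.filter(Boolean).length;
  if (passes === attempts.length) return 'stable-pass';
  if (passes === 0) return 'consistent-fail';
  return 'flaky'; // mixed results: investigate instead of trusting the last rerun
}

// Made-up attempt history for three tests.
const history = {
  'login shows dashboard': [true, true, true],
  'product list renders': [false, true, true],
  'checkout applies discount': [false, false, false],
};

for (const [name, attempts] of Object.entries(history)) {
  console.log(`${name}: ${summariseAttempts(attempts)}`);
}
```

A test that lands in the flaky bucket is exactly the kind that deserves a look in the developer tools before anyone presses the rerun button again.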

Written by: Kevin Tuck